New Critical AI Studies in the Digital Humanities

Text is everywhere around us. It is embedded in government and corporate reports. Text fills the pages of newspapers, shapes emails, and makes up the content of blog entries. Text is not only a product of human communication and expression, but is also increasingly generated by Artificial Intelligence (AI). Certainly, computational techniques for engaging text serve researchers well in our increasingly text producing world.

This course explores how we create meaning from textual data, situating course themes within the evolving fields of critical AI studies and the digital humanities. In doing so, it combines two mechanisms into a method of critical inquiry: (a) the use of generative AI for text analysis and (b) the representation of text data as knowledge graphs that capture relationships between concepts, cultural context, and relevant metadata.

Why are these mechanisms important? AI offers new opportunities for the computational analysis of text. Today, researchers can simply prompt large language models (LLMs) to generate narrative summaries or the code needed for an analysis. However, this process is not clearly explainable, and researchers risk generating responses that lack critical insight into the underlying data. Knowledge graphs, on the other hand, have long provided a means to store and represent nuanced information. Paired with LLMs, they add explainability to generative responses by linking them to a representation of the original data. This approach not only allows researchers to trace generative responses back to their sources, but can also be used to enrich generative responses with the nuanced context of a knowledge graph.

When both mechanisms are within a researcher’s grasp (LLMs and knowledge graphs), AI offers a new, critical process through which we can produce knowledge.

Text Mining as Humanities Method

Computer-powered methods are changing the way that we access information about society. New methods help us to detect change over time, to identify influential figures, and to name turning points. What happens when we apply these tools to a million congressional debates or tweets?

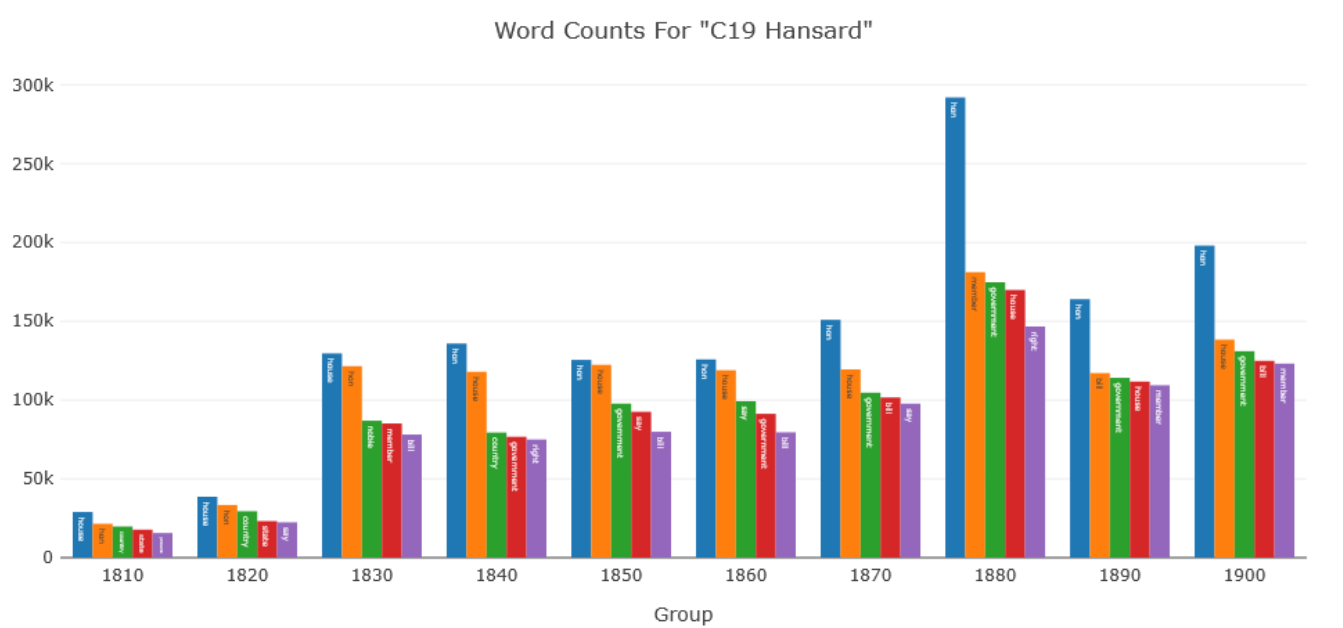

This course teaches students how to use computational methods to analyze texts, combining digital tools with humanistic inquiry. Students begin by learning foundational skills in Jupyter Notebooks, then build toward techniques like word counting, n-grams, lemmatization, and named entity recognition. Along the way, they explore how to track changes in language over time, measure distinctiveness, and model word meaning through word-context vectors. The course emphasizes interpretation, showing students how to use these methods to uncover patterns, shifts, and relationships in discourse that would be difficult to see through traditional reading alone.

Because this class encourages exploring discourses that have shaped our culture throughout time, students have access to a diverse range of data sets for their own research projects. These data sets include: Reddit posts from the Push Shift API from 2008 to 2015; the 19th-century Hansard Parliamentary debates of Great Britain; the Stanford Congressional Records; the Dallas, Texas and Houston, Texas City Council Minutes; literature from Project Gutenberg; metadata from the NovelTM Datasets for English-Language Fiction, 1700–2009; and corporate reports from EDGAR, the Electronic Data Gathering, Analysis, and Retrieval system.